Drummer Game (2011) is an EAGER-funded project (National Science Foundation, grant no. 0940723) focused on implementing an interactive multimedia spectacle featuring Chinese terracotta soldiers. Two teams of six drummers each control six cohorts of terracotta soldiers, with every drummer issuing orders to their respective cohort using a series of rhythmic patterns, each representing a particular command. The goal of the spectacle is simple: beat the other army. Packaged into all this are several newly developed technologies and their respective research vectors, including GPU-based crowd simulation (led by collaborator Yong Cao) and the development of 3D visual assets (led by collaborator Dane Webster, who is also the author of the video linked above). My contributions are twofold. The first is an unconventional approach to analog signal input and analysis for a series of authentic and very resonant Chinese war drums, combining dynamic time warping with “binpass” FFT filtering and amplitude tracking. The second is a new situation-aware soundtrack engine that provides seamless and musically satisfying transitions between different states (e.g. attack, retreat, march, stand, win, loss). It does so by ensuring that all transitions occur on a downbeat, obey the meter and tempo (including changing tempi), and pull transition material from a database of appropriate options based on the combination of starting and ending states.
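To illustrate how dynamic time warping can match a played rhythm against known command patterns, here is a minimal Python sketch. It is not the project's MaxMSP implementation: the inter-onset-interval representation, the template values, and the function names (`dtw_distance`, `normalize`, `classify`) are all assumptions made for illustration, and the FFT filtering and amplitude tracking stages are left out entirely.

```python
from math import inf


def dtw_distance(a, b):
    """Dynamic time warping distance between two 1-D numeric sequences."""
    n, m = len(a), len(b)
    # cost[i][j] holds the minimal cumulative cost of aligning a[:i] with b[:j].
    cost = [[inf] * (m + 1) for _ in range(n + 1)]
    cost[0][0] = 0.0
    for i in range(1, n + 1):
        for j in range(1, m + 1):
            d = abs(a[i - 1] - b[j - 1])
            cost[i][j] = d + min(
                cost[i - 1][j],      # a advances, b repeats
                cost[i][j - 1],      # b advances, a repeats
                cost[i - 1][j - 1],  # both advance
            )
    return cost[n][m]


def normalize(intervals):
    """Scale intervals so they sum to 1, making the comparison tempo-independent."""
    total = sum(intervals)
    return [x / total for x in intervals]


# Hypothetical templates: inter-onset intervals (in beats) per command.
# The real pattern set is not reproduced here.
TEMPLATES = {
    "attack": [0.25, 0.25, 0.5, 1.0],
    "retreat": [1.0, 0.5, 0.25, 0.25],
    "march": [0.5, 0.5, 0.5, 0.5],
}


def classify(intervals):
    """Return the name of the template closest to the played intervals."""
    played = normalize(intervals)
    return min(
        TEMPLATES,
        key=lambda name: dtw_distance(played, normalize(TEMPLATES[name])),
    )
```

Because the intervals are normalized before comparison, the same pattern played at half speed, for example `classify([0.5, 0.5, 1.0, 2.0])`, still maps to the same command, while the warping step tolerates small timing deviations by the drummer.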

The two audio-centric components have been encapsulated in a new audio engine codenamed Aegis, capable of utilizing audio snippets of varying granularity and supporting a database of possible entry points, so as to ensure maximum variety while retaining the structural integrity of a precomposed work. Consequently, in addition to the ensuing musical conundrum of six cohorts being led by six drummers, each issuing unique commands to their respective cohort, the entire team is accompanied by a soundtrack that best reflects its current state. The system also accounts for audience input: the cumulative loudness of the audience's cheering and the amount of light generated by their smartphones and other light-emitting devices would influence their team’s overall morale. Although the final production was limited to a more controlled environment, the project yielded a complete, scalable, and easily expandable audio engine realized in MaxMSP, with supporting content. Another challenge posed by the project was the use of authentic Chinese war drums, whose resonance resulted in profuse signal cross-contamination through sympathetic vibration and called for careful consideration of alternative signal capture techniques.
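The downbeat-aligned transition logic can be sketched in a few lines of Python. This is only an illustration under stated assumptions, not the Aegis engine itself (which is a MaxMSP patch): the snippet names, the `TRANSITIONS` table, and the function names are hypothetical, and the tempo is assumed to stay constant until the next downbeat, whereas the actual engine also handles changing tempi.

```python
import math
import random

# Hypothetical transition database keyed by (starting state, ending state).
# Each entry lists interchangeable snippets to allow for variety.
TRANSITIONS = {
    ("march", "attack"): ["march_to_attack_a", "march_to_attack_b"],
    ("attack", "retreat"): ["attack_to_retreat_a"],
    ("stand", "march"): ["stand_to_march_a", "stand_to_march_b"],
}


def beats_until_downbeat(beat_position, beats_per_bar):
    """Beats remaining until the next downbeat.

    beat_position is the 0-based position in beats since the start of the
    piece. A position exactly on a downbeat yields a full bar, so the
    transition is always scheduled in the future.
    """
    next_bar = math.floor(beat_position / beats_per_bar) + 1
    return next_bar * beats_per_bar - beat_position


def seconds_until_downbeat(beat_position, beats_per_bar, tempo_bpm):
    """Convert the remaining beats to seconds (assumes a constant tempo)."""
    return beats_until_downbeat(beat_position, beats_per_bar) * 60.0 / tempo_bpm


def plan_transition(from_state, to_state, beat_position, beats_per_bar,
                    tempo_bpm, rng=random):
    """Pick transition material and the delay until it should start."""
    options = TRANSITIONS.get((from_state, to_state))
    # With no dedicated material, fall back to a plain cut on the downbeat.
    snippet = rng.choice(options) if options else None
    delay = seconds_until_downbeat(beat_position, beats_per_bar, tempo_bpm)
    return {"snippet": snippet, "delay_seconds": delay}
```

For example, a request to move from attack to retreat arriving at beat 5.5 of a piece in 4/4 at 120 BPM would be deferred by 2.5 beats (1.25 seconds) so that the transition material enters on the downbeat of the next bar.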

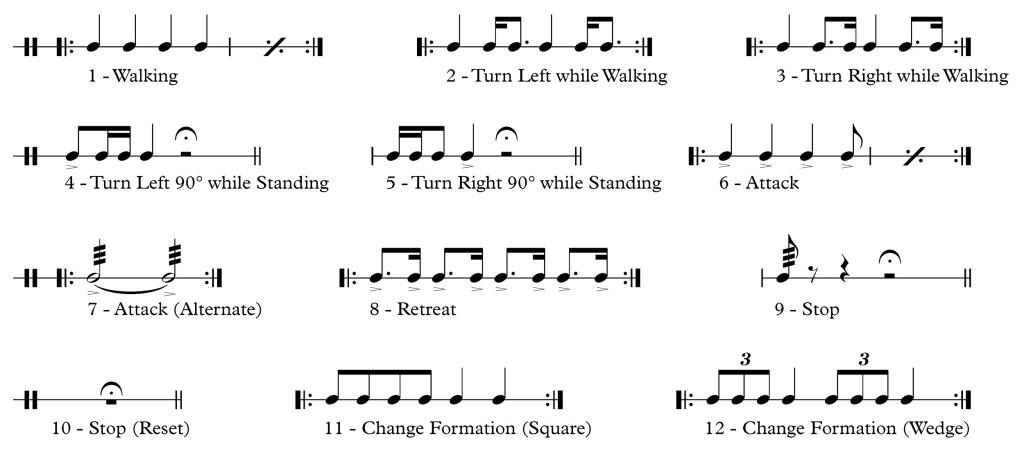

Above, you can see a list of patterns the initial engine was capable of recognizing. Below are two musical excerpts: one showing transitions between various states using musically appropriate transition material, and a second, a parody that uses silly, out-of-context transition material instead in order to clearly delimit transition boundaries and showcase their meter sync and strong-beat awareness. The soundtrack snippets were composed as placeholders, with the primary goal of testing and assessing the audio engine’s capabilities. They were put together with contributions from two of my undergraduate research assistants, Michael Matthews and Jonathan Utt.

Research Team:

Ivica Ico Bukvic (Co-PI)

Yong Cao (VT)

Dane Webster (VT)

Francis Quek (VT, PI)

Listening Examples:

Normal transitions

Parodied transitions

Research Publications: