In preparation for Virginia Tech’s inaugural presence at the SXSW 2016 expo in Austin, Texas, I was invited to serve on the University-level task force whose goal was to identify content for a generously sized booth. As a result, I introduced a University-wide survey to collect appropriately developed and engaging content. Through this process I became familiar with a large number of projects and initiatives with high visibility potential. More importantly, I was introduced to the work of a faculty member and soon-to-be colleague and collaborator, Dr. Lynn Abbott, whose research focuses in part on recognizing and categorizing human facial expressions, with a specific focus on helping autistic individuals master the recognition of emotions through facial expressions. When I learned that OPERAcraft was one of the projects selected to be highlighted at SXSW, I approached Lynn to see if there was a way to pursue collaborative convergence and research consolidation.

The end result was a new project titled Cinemacraft and its installation-centric variant Mirrorcraft. It builds on the success of OPERAcraft and pushes the immersion further by incorporating a Kinect HD feed that tracks both body movement and facial expressions and maps them onto the Minecraft avatar. During the intensive three-month development, the project engaged four undergraduate students, one master’s student, and one postdoc. The above video showcases a January 2017 iteration based on Minecraft.

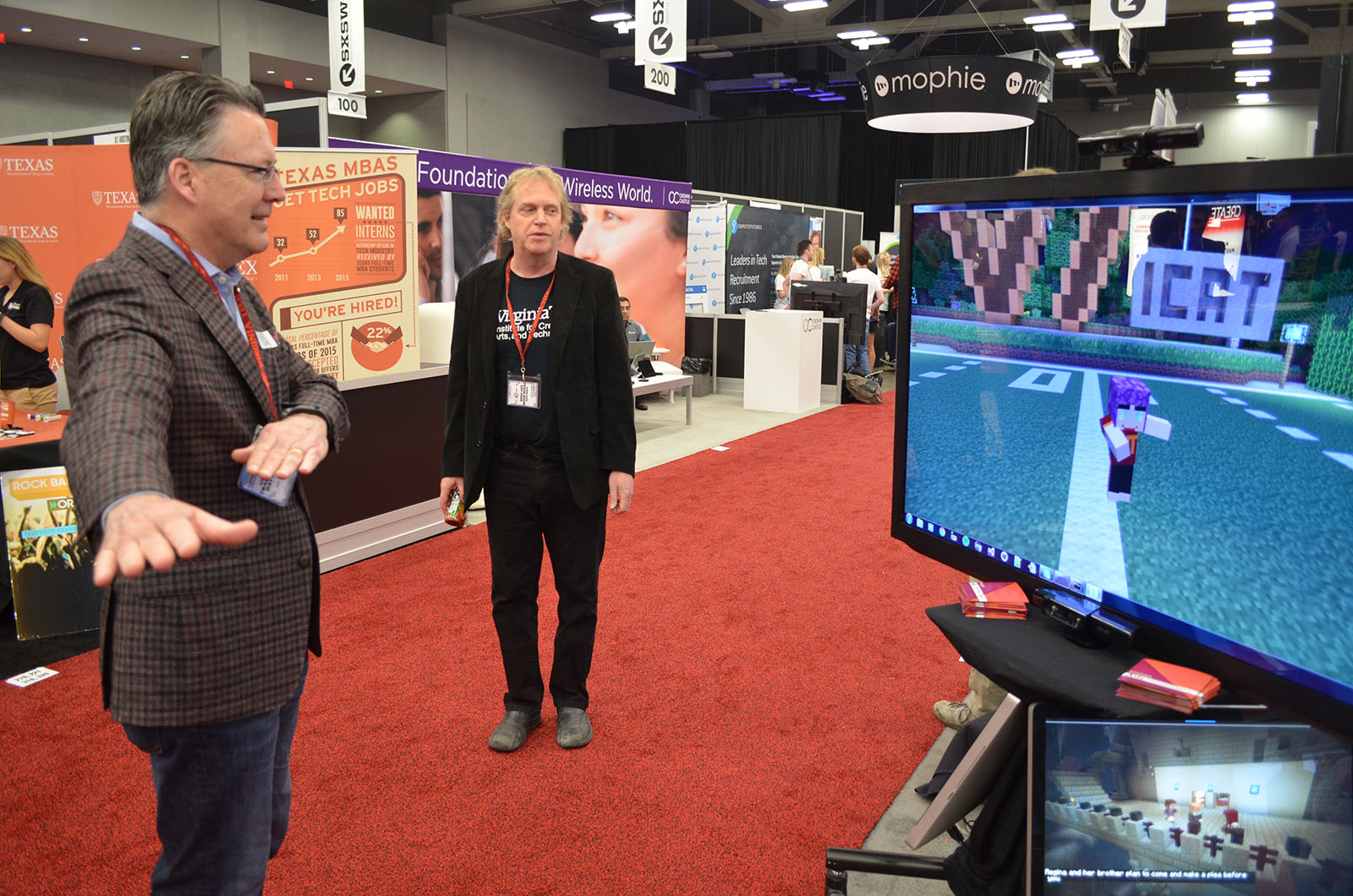

The expo was a great success, with thousands of visitors having an opportunity to explore Mirrorcraft and other amazing creations by Virginia Tech scholars. Even Virginia Tech President Tim Sands took a moment to play with the installation. Yet, due to complex licensing stipulations associated with reverse engineering the Minecraft source code, the project has since transitioned to the free and open-source alternative titled Minetest as its new platform of choice. In addition to porting OPERAcraft’s features, the new system is also designed to further enhance facial tracking and lip syncing by employing contextual sensory fusion. For instance, if a person’s mouth is open but they are making no sound, the Kinect HD’s feed takes precedence over the audio-based lip-sync algorithm. On the other hand, if a person is making sound and the mouth is moving, the lip-sync takes precedence over the mouth position observed by the Kinect HD, because the Kinect’s framerate is significantly lower.
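The precedence rule described above can be illustrated with a minimal Python sketch. This is not the project’s actual code: the `MouthSample` fields, the `fuse_mouth` function, and the `SOUND_THRESHOLD` value are all hypothetical names and numbers chosen for illustration, and the fallback to the Kinect reading when sound is present but the mouth is still is my own assumption rather than something the project specifies.

```python
from dataclasses import dataclass

# Hypothetical loudness level above which the person counts as "making sound".
SOUND_THRESHOLD = 0.05


@dataclass
class MouthSample:
    """One frame of (hypothetical) sensor readings, all normalized to 0..1."""
    kinect_openness: float   # mouth openness as seen by the camera
    kinect_moving: bool      # whether the camera sees the mouth moving
    audio_level: float       # microphone loudness
    lipsync_openness: float  # mouth openness estimated from audio


def fuse_mouth(sample: MouthSample) -> float:
    """Pick the mouth openness to apply to the avatar for this frame."""
    making_sound = sample.audio_level > SOUND_THRESHOLD
    if making_sound and sample.kinect_moving:
        # Speech with visible mouth motion: the audio-based lip-sync wins,
        # since it updates faster than the camera feed.
        return sample.lipsync_openness
    # Silent (e.g. mouth held open), or sound without mouth motion
    # (assumed here to be background noise): trust the camera.
    return sample.kinect_openness


silent_open = MouthSample(0.8, False, 0.0, 0.0)
speaking = MouthSample(0.3, True, 0.5, 0.6)
print(fuse_mouth(silent_open), fuse_mouth(speaking))
```

A real system would likely blend the two sources smoothly rather than switch between them abruptly, but the hard switch keeps the precedence logic easy to see.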

The newfound platform is one step closer to an immersive real-time collaborative machinima that conveniently side-steps the uncanny valley using tried-and-true Minecraft-like aesthetics. And while this project is unlikely to reach the capabilities of the recent impressive advances in real-time machinima, such as GDC 2016’s Hellblade, I see it carving its own niche, much like Minecraft did, catering to an entirely different audience with different needs and expectations. Once again, I find myself particularly curious about the project’s outreach and K-12 education potential, which I am eager to explore further.

Shows:

- SXSW 2016

- 2016 ICAT day

- Science Museum of Western Virginia (February 2017-present)

- Virginia Tech Visitor Center (pending)

Research Publications:

Software: