Even though the developer version of Google Glass is all but dead, the opportunities this visionary piece of hardware has offered remain largely untapped. In 2014, having joined the Google Glass developers, I set out to explore the hardware’s performative potential and to take L2Ork one step closer to that elusive dream of making all the supporting technology wearable and essentially invisible. In an ideal world, I envision an L2Ork performance featuring seemingly technology-less humans who, through the power of their own choreography, are capable not only of producing sound but also (much as with acoustic instruments) of feeling it through haptic as well as visual feedback. Despite staggering progress, we are still far from this ideal: today’s wearable technology is neither small nor powerful enough to make it a reality. Yet Google Glass offers the next step towards shedding laptop screens in favor of a nearly invisible head-mounted display, something that has proven particularly useful given L2Ork’s choreography-centric performance practice, where body motion and/or posture often make keeping a screen in the performer’s sight impractical, if not outright impossible.

When it comes to digital instrument parameter monitoring and score following, L2Ork has no standard user interface (UI). After all, every composition calls for a different score, a different approach to score interpretation and ensemble synchronization, and consequently a different set of widgets. For this reason, my student Spencer Lee and I set out to build an enhanced version of Pd-L2Ork’s IEMgui-compatible widgets that can be instantiated and modified over a network socket.

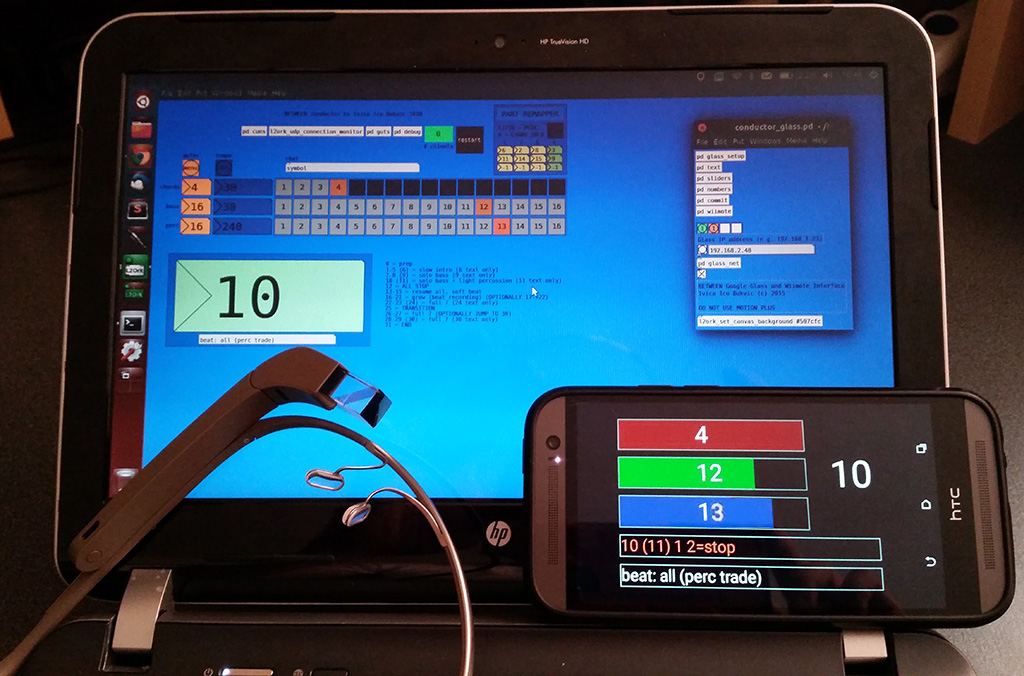

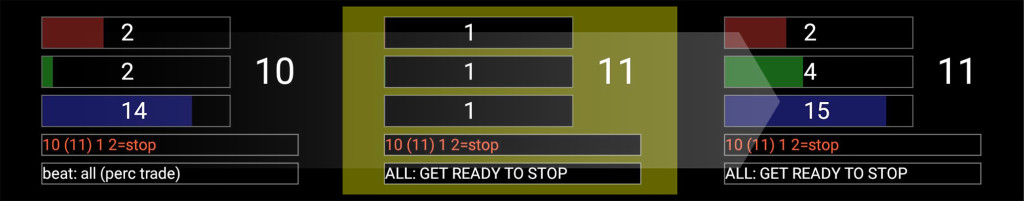

L2Ork Glass (also known by its working title Glasstra) is a simple Google Glass application offering a set of data-monitoring widgets with an easily reconfigurable UI, all of which are created, modified, and deleted over TCP and/or UDP network sockets using Pure Data’s FUDI protocol. Widgets are fully customizable and include an alpha color channel that allows for more complex layering. As a result, Glasstra is capable of projecting a context-aware UI whose layout and function can be easily altered on the fly. The photo above and screenshots below showcase the UI specially written for L2Ork’s recent work Between, which was performed using Google Glass as part of the SEAMUS 2015 national conference. To get ever closer to my ideal goal, I conducted the piece using Google Glass and a Wii Nunchuk that was hidden in my right hand and connected to a Wiimote strapped to my forearm, so that the controller was essentially invisible to the audience and yet offered haptic feedback through the Wiimote’s rumble pack (I used a similar controller approach in iteration13 and a performance version of FORGETFULNESS). This gave me full freedom of motion with my arms while still allowing me to observe the score and control the ensemble’s networked system.
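To give a rough idea of what driving such a UI over a socket might look like, here is a minimal Python sketch of a FUDI sender. The only part taken from the protocol itself is the framing: a FUDI message is a sequence of whitespace-separated atoms terminated by a semicolon. Everything else is an assumption made for illustration, including the helper names `fudi_atom`, `fudi_message`, and `send_udp`, and the simplified escaping rule. Glasstra’s actual command vocabulary is not documented here, so any widget command passed to these helpers is hypothetical.

```python
import socket


def fudi_atom(value) -> str:
    """Render one value as a FUDI atom.

    Simplifying assumption: only backslashes, spaces, and semicolons are
    escaped, so that they cannot split or prematurely end the message.
    """
    text = str(value)
    # Escape the backslash first so later escapes are not doubled.
    for ch in ("\\", " ", ";"):
        text = text.replace(ch, "\\" + ch)
    return text


def fudi_message(*atoms) -> bytes:
    """Join atoms with single spaces and terminate with a semicolon."""
    body = " ".join(fudi_atom(a) for a in atoms)
    return (body + ";\n").encode("utf-8")


def send_udp(message: bytes, host: str, port: int) -> None:
    """Send one already-formatted FUDI message as a single UDP datagram."""
    with socket.socket(socket.AF_INET, socket.SOCK_DGRAM) as sock:
        sock.sendto(message, (host, port))
```

With these helpers, a call such as `send_udp(fudi_message("create", "slider", "vol", 10, 20), host, port)` would put the bytes `create slider vol 10 20;` on the wire. The atoms `create`, `slider`, and `vol` are made-up placeholders rather than real Glasstra commands, and `host` and `port` stand for wherever the Glass application happens to be listening.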

Glasstra’s applicability extends well beyond L2Ork, and I can imagine it being used in an array of other scenarios where a UI layout may need to be altered remotely in real time to remain useful within an evolving context. Likewise, I see it as a powerful rapid prototyping environment for Google Glass, one where the UI can be adjusted and its impact (re)assessed on the fly. Playing with the interface has also taught me some interesting lessons about making the most of limited screen real estate: by balancing widget size, density, and layout, one can provide the maximum amount of useful information without overwhelming the user, while still leaving room for time-critical alerts to come through. Hopefully, in the not too distant future, there will be a more affordable version of Google Glass that will allow the entire ensemble to replace static laptop screens with nearly inconspicuous head-mounted displays.