In 2013, a collaborative team led by ICAT director Dr. Ben Knapp was awarded an NSF grant for innovative infrastructure to be embedded throughout the newly opened Moss Arts Center. I served as Co-PI, focusing on the aural aspects of the project.

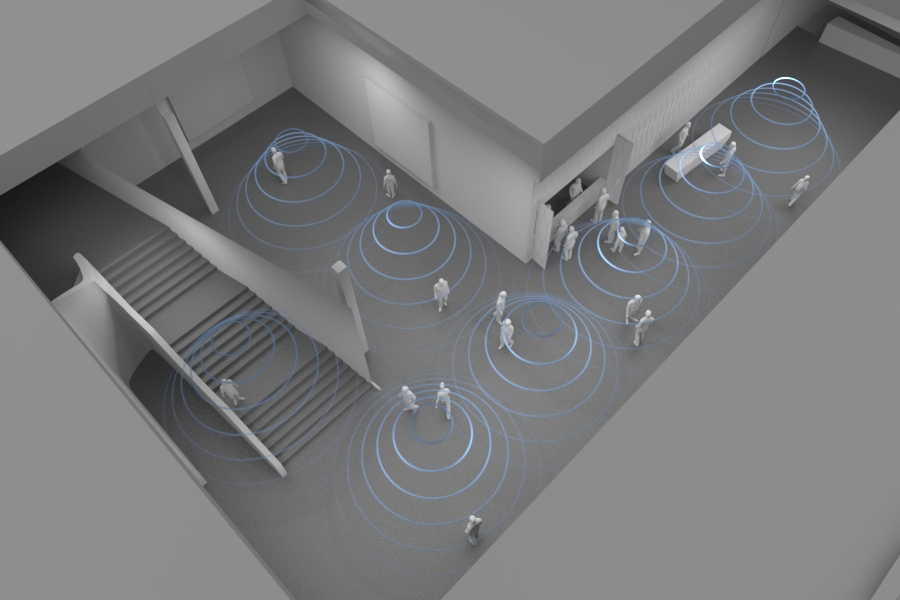

Mirror Worlds was envisioned as persistent infrastructure designed to monitor user activity within the physical building and its virtual counterpart. Its implementation consists of an array of fisheye cameras, a microphone array, and a series of “stations”, namely large wall-mounted tablets that serve as virtual “mirrors”. The infrastructure is intended to bridge the real and virtual agents traversing the space, allowing them to interact across the real-virtual divide. Accordingly, instead of literally mirroring passersby through a webcam or similar camera technology, the “mirrors” render their presence in the virtual rendition of the building, which is accessible online.

My work focused on two things: the Mirrorcraft project (a subset of what later became known as Cinemacraft), designed to provide real-virtual mirrors using a custom Minecraft mod in conjunction with Kinect HD, and a means of quadrilateralizing sound sources within a space in real time. The latter led to an efficient implementation within MaxMSP that continues to be developed as we explore optimal beamforming strategies. The ultimate goal of the project is sensory fusion that combines visual blob tracking with aural quadrilateralization, thereby improving the fidelity of tracked data and minimizing artifacts inherent to either approach when used in isolation.
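
To illustrate the fusion idea in general terms, the sketch below combines one visual and one aural position estimate using inverse-variance weighting, so the less noisy modality contributes more to the result. It is a generic textbook technique offered as an illustration only, not the project's MaxMSP implementation; the `Estimate` type, the `fuse` function, and the per-modality variance values are all hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Estimate:
    """A 2D position estimate (hypothetical type, for illustration only)."""
    x: float
    y: float
    variance: float  # assumed isotropic uncertainty, e.g. in squared meters


def fuse(visual: Estimate, aural: Estimate) -> Estimate:
    """Combine two estimates of the same source by inverse-variance weighting."""
    if visual.variance <= 0 or aural.variance <= 0:
        raise ValueError("variances must be positive")
    w_visual = 1.0 / visual.variance
    w_aural = 1.0 / aural.variance
    total = w_visual + w_aural
    return Estimate(
        x=(w_visual * visual.x + w_aural * aural.x) / total,
        y=(w_visual * visual.y + w_aural * aural.y) / total,
        variance=1.0 / total,  # fused estimate is tighter than either input
    )


# Assumed example values: a confident visual track and a noisier aural one.
fused = fuse(Estimate(0.0, 0.0, 1.0), Estimate(4.0, 0.0, 3.0))
```

With these assumed inputs the fused position sits closer to the visual estimate, since its variance is lower, and the fused variance is smaller than that of either input.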

Research team:

Ivica Ico Bukvic (Co-PI)

Yong Cao (VT, Boeing)

James Ivory (VT)

Benjamin Knapp (VT, PI)

Nicholas Polys (VT)

Research publications: