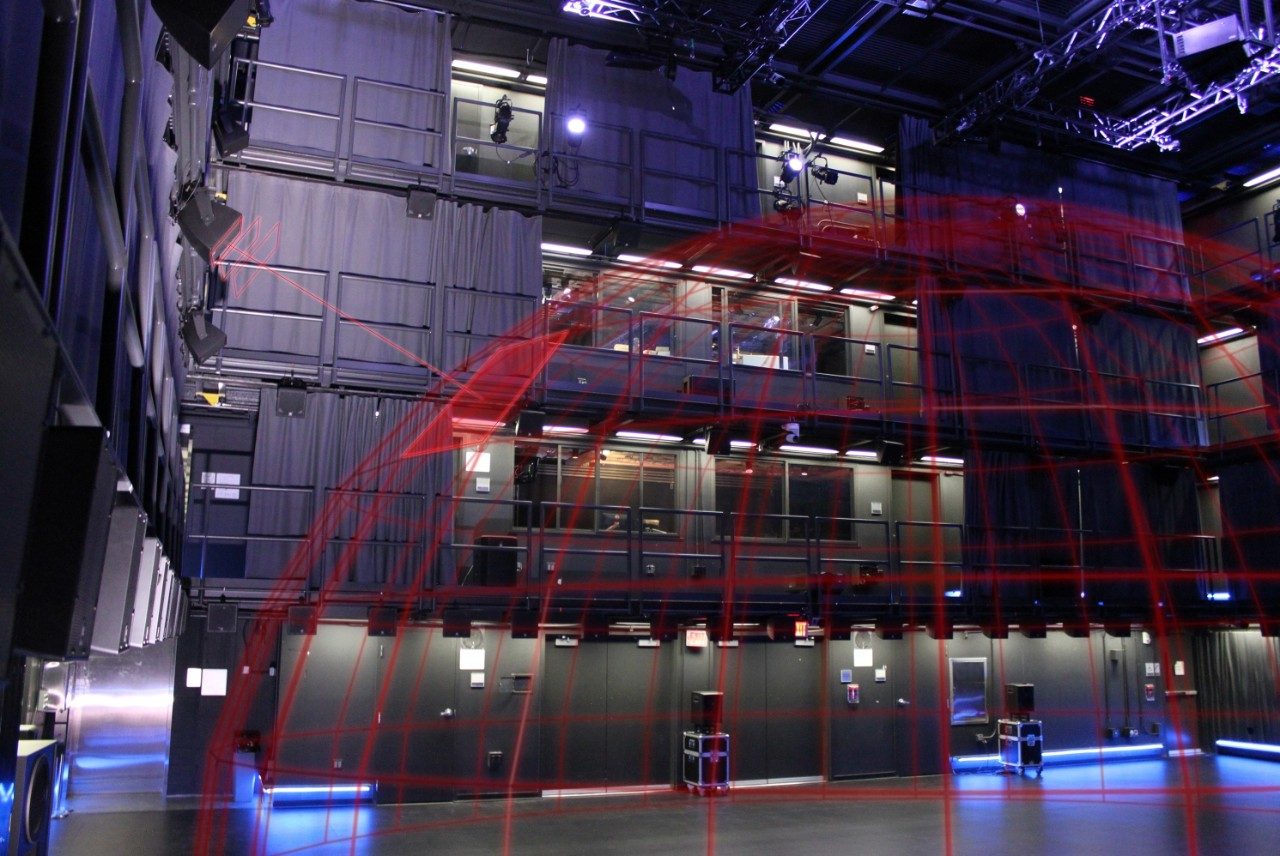

In August 2017, the Spatial Audio Data Immersive Experience (SADIE) project received $149,930 in funding from the National Science Foundation. The goal of this exploratory study was to minimize risks associated with the study of immersive data sonification, a promising nascent field in need of ground truths. The project’s deliverables included a malleable infrastructure and a collection of pilot studies designed to highlight the newfound immersive sonification research trajectories. The ensuing SADIE system builds upon the Virginia Tech Cube’s infrastructure. It is designed to offer a level playing field for the study of various spatialization techniques in immersive scenarios. Further, it leverages intuitive interaction techniques and spatialization approaches that limit idiosyncrasies, and therefore noise, in the ensuing study design and user performance data.

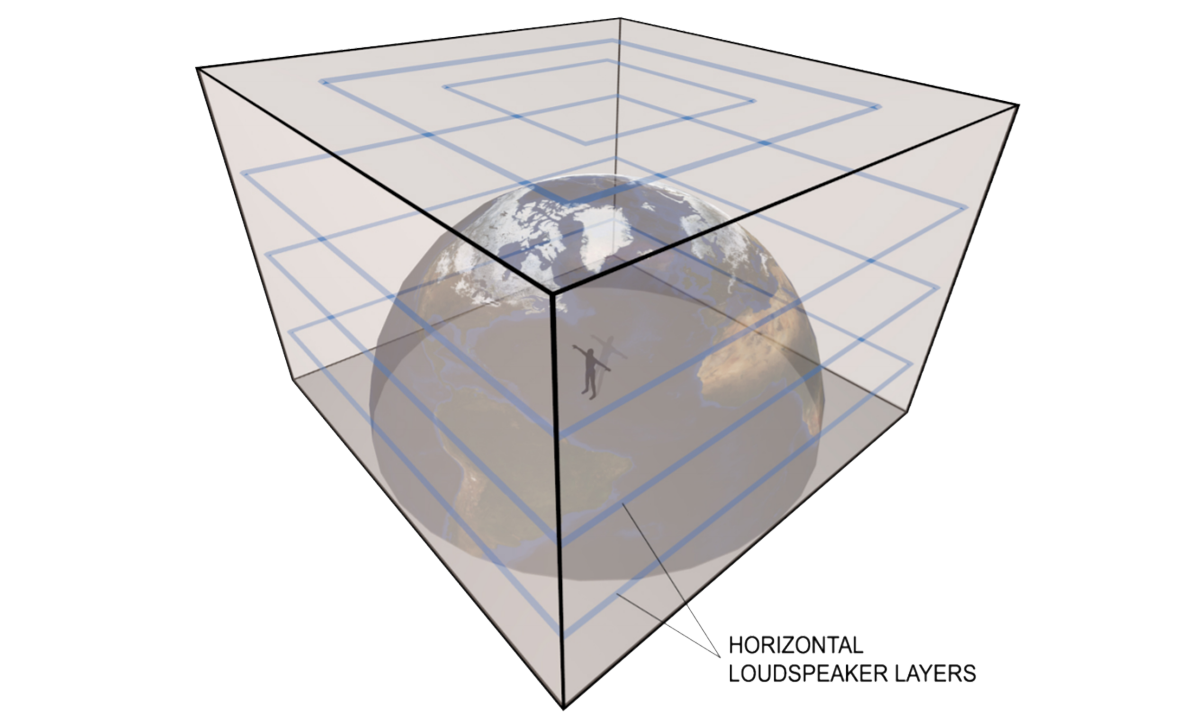

A critical part of minimizing these idiosyncrasies is the use of inherently spatial geospace data, which sidesteps any need for arbitrary spatialization of such data. In addition, the geospace sciences were identified by my collaborator and space science expert Dr. Gregory Earle as an area in need of better, spatially aware ways of searching for potential patterns among inherently multivariate data. As a result, SADIE’s primary motivation is to serve as a platform for the observation and study of Earth’s orbital data distributed across the Cube’s perimeter. Imagine a hemisphere’s worth of multivariate data distributed across the Cube, with one or more observers traversing the equatorial cross-section (the Cube’s floor) and studying the ensuing immersive soundscape in search of new correlations.

Central to SADIE is its focus on the Exocentric Environment. Instead of trying to create a perfect spatial image that is resistant to the listener’s position, a goal commonly associated with the Egocentric approach, the newly coined Immersive Exocentric Sonification embraces location-specific perception imperfections and sources that emanate exclusively from the Cube’s perimeter as a means to attain greater spatial clarity. By doing so, the system is unencumbered by the potential idiosyncrasies of a listening “sweet spot” or of virtual sources within the space that dissolve as soon as one moves outside that “sweet spot”.

Another critical component of the SADIE system is the D4 library. Apart from its ability to efficiently drive a large number of loudspeakers, or High Density Loudspeaker Arrays, in real time using the Layer Based Amplitude Panning (LBAP) algorithm, D4 also offers a first-of-its-kind spatial mask. Akin to an image mask commonly used in graphic design, most notably in Photoshop, the spatial mask allows for complex spatialization patterns that go beyond point sources and spherical source spread or radius.
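To make the image-mask analogy more concrete, here is a minimal Python sketch. It is not D4’s code and it does not implement LBAP: it swaps in plain constant-power pairwise panning on a single, evenly spaced horizontal ring of loudspeakers, and then multiplies the resulting gains by a per-loudspeaker mask. The function names `ring_gains` and `apply_mask`, the ring layout, and the mask format are all assumptions made for illustration.

```python
import math


def ring_gains(source_az_deg, num_speakers):
    """Constant-power pairwise panning on an evenly spaced ring.

    Hypothetical layout: speaker i sits at azimuth i * 360 / num_speakers
    degrees. This is a stand-in for illustration, not the LBAP algorithm.
    """
    spacing = 360.0 / num_speakers
    pos = (source_az_deg % 360.0) / spacing   # fractional speaker index
    lower = int(math.floor(pos)) % num_speakers
    upper = (lower + 1) % num_speakers
    frac = pos - math.floor(pos)              # 0 at lower, 1 at upper
    gains = [0.0] * num_speakers
    gains[lower] += math.cos(frac * math.pi / 2)
    gains[upper] += math.sin(frac * math.pi / 2)
    return gains


def apply_mask(gains, mask):
    """Scale each loudspeaker gain by a mask value in [0, 1].

    Like a grayscale image mask: 1 passes the signal, 0 blocks it,
    and values in between attenuate it.
    """
    if len(gains) != len(mask):
        raise ValueError("mask needs one value per loudspeaker")
    return [g * m for g, m in zip(gains, mask)]


# A source halfway between speakers 0 and 1 on a four-speaker ring,
# with speaker 1 masked out.
gains = ring_gains(45.0, 4)
masked = apply_mask(gains, [1.0, 0.0, 1.0, 1.0])
```

In this sketch the mask is independent of the panning step, so the same mask could shape any set of per-loudspeaker gains, which is the property the image-mask comparison suggests.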

SADIE utilizes an array of software and hardware, including the Cube’s 24 Qualisys motion tracking cameras, a custom-built glove-based interface, and the Cube’s 130-loudspeaker system. The Qualisys data is forwarded to a custom Unity program designed to parse the data and capture critical vectors and points of intersection, so that we can identify with sub-millimeter accuracy where a user is pointing with their finger. This in turn allows us to accurately measure users’ ability to track aural source(s) as they occur around the Cube’s perimeter. The resulting data is piped to MaxMSP, where it is compared to what has been generated by the D4 library. The inverse of the same interface has already been used in artistic scenarios to intuitively drive the interactive spatialization of the aural content.
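The “vectors and points of intersection” step can be sketched as a ray cast from the tracked hand to the room boundary, followed by an angular comparison with the source position. The Python below is an illustrative assumption rather than the project’s Unity code: it models the space as an axis-aligned box with made-up dimensions, and the names `ray_box_exit` and `angular_error_deg` are hypothetical.

```python
import math


def ray_box_exit(origin, direction, box_min, box_max):
    """Point where a ray that starts inside an axis-aligned box leaves it."""
    t_exit = math.inf
    for o, d, lo, hi in zip(origin, direction, box_min, box_max):
        if not lo <= o <= hi:
            raise ValueError("origin must lie inside the box")
        if d > 0:
            t_exit = min(t_exit, (hi - o) / d)   # distance to the far wall
        elif d < 0:
            t_exit = min(t_exit, (lo - o) / d)   # distance to the near wall
    if math.isinf(t_exit):
        raise ValueError("direction must be non-zero")
    return tuple(o + t_exit * d for o, d in zip(origin, direction))


def angular_error_deg(origin, pointed, target):
    """Angle in degrees, seen from origin, between two points."""
    a = [p - o for p, o in zip(pointed, origin)]
    b = [t - o for t, o in zip(target, origin)]
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(x * x for x in b))
    cos_angle = max(-1.0, min(1.0, dot / (norm_a * norm_b)))
    return math.degrees(math.acos(cos_angle))


# Hypothetical room: 10 x 10 x 10 units, floor at z = 0.
box_min, box_max = (-5.0, -5.0, 0.0), (5.0, 5.0, 10.0)
hand = (0.0, 0.0, 1.5)          # tracked hand position (assumed)
finger_dir = (1.0, 0.0, 0.0)    # pointing direction (assumed)
hit = ray_box_exit(hand, finger_dir, box_min, box_max)
error = angular_error_deg(hand, hit, (5.0, 5.0, 1.5))
```

Measuring the error as an angle from the listener’s position, rather than as a distance along the wall, keeps the measure comparable across different positions in the room; this is a design choice of the sketch, not a documented detail of SADIE.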

SADIE further allows for the integration of other spatialization approaches, starting with a virtual binaural spatialization system. This has allowed the pilot study to compare such a virtual system to the physical (LBAP) one with only a minimal set of adjustments, most notably the use of headphones in place of the physical loudspeaker system. In parallel, SADIE has allowed for the comparison of the more traditional Egocentric approaches to the study of human spatial aural capacity with the newly proposed Exocentric approach, which reflects the way we intuitively interact with the world.

With a number of publications currently under submission, I will update this page with new findings as they are published. Further, to facilitate research in the area of Immersive Exocentric Sonification, the team’s goal in the near future is to make the SADIE software infrastructure freely available.

Research Team:

Ivica Ico Bukvic (PI)

Gregory Earle (Co-PI, VT)

Research Publications:

- I. Bukvic, G. Earle, *D. Sardana, and W. Joo, “STUDIES IN SPATIAL AURAL PERCEPTION: ESTABLISHING FOUNDATIONS FOR IMMERSIVE SONIFICATION,” International Conference on Auditory Display, Newcastle upon Tyne, United Kingdom, 2019. [international]

- *D. Sardana, *W. Joo, I. I. Bukvic, and G. Earle, “Introducing Locus: a NIME for Immersive Exocentric Aural Environments,” New Interfaces for Musical Expression, Porto Alegre, Brazil, 2019, pp. pending. [international]

- I. Bukvic, “Introduction to Sonification,” in Foundations in Sound Design for Embedded Media, M. Filimowicz, Ed. Routledge, 2019.

- I. Bukvic and G. Earle, “REIMAGINING HUMAN CAPACITY FOR LOCATION-AWARE AUDIO PATTERN RECOGNITION: A CASE FOR IMMERSIVE EXOCENTRIC SONIFICATION,” International Conference on Auditory Display, Houghton, Michigan, 2018, pp. 153-159. [international]